Feature allocation, probability functions, and paintboxes

Intro

- unsupervised learning

- canonical example, clustering

- what if objects are a part of multiple groups?

- feature allocation

- each group is now called a feature not a cluster

- Assumptions

- exchangeable

- finite number of features per datapoint (can’t have more animals than pixels in photo)

- Definitions:

- exchangeable partition probability function (frequency of features, exchangeable since the order doesn’t matter (cat/dog/mouse, mouse/cat/dog).

- feature case: chose features in proportion to their occurrence frequency.

- function of the number of data points as well as the frequencies of the features

- does every feature allocation have an exchangeable probability function? No

- Counterexample: not all feature sets with same number of data points and same number of features per data point have same probability.

Kingman Paintbox

- start with unit interval (1D), partition at random (countably infinite elements).

- draw randomly from this interval. If they are in the same partition, ID them in the same cluster

- so this is clearly an exchangeable partition — can reorganize the roles.

- Reverse is also true, if you have an exchangable partition, there is a corresponding partition interval (paintbox).

Add a twist: (feature paintbox)

- what if groups aren’t necessarily mutual exclusive? subintervals can overlap.

- so a uniform random draw can intersect multiple features.

- changing the order of the data points doesn’t matter (they came from a uniform random draw)

- The reverse we prove to also be true — for every feature map, there is a corresponding feature paintbox

- draw K Poisson. For each K draw a frequency of size q Beta distributed frequency size.

- How to allocate features for the Indian Buffet problem?

- sizes of sub-boxes are determined by their frequency

- what about their overlap? For every combination of feature 1, there is a dependent and indpendent fraction. For every combination of feature 1 and 2, there is a feature 3 overlap and non-overlap fraction.

are feature frequency models and EFPMs the same space of distributions?

- Yes all EFPFs can be represented by a feature frequency model and vice verca.

Recap

- feature paintbox is a characterization of exchangeable feature models

- Discussion of different classes and overlap of clustering/feature models exchangeable clusters

How do we learn a structure

- most popular unsupervised approach — K-means. (easy, fast, parallizable)

- Disadventages: only good for a specific K clusters.

- Alternative: Nonparametric Bayes

- Modular,

- flexible (K can grow as data grows),

- coherent treatment of uncertainty.

- not efficient on large data

MAD-Bayes perspective

- Inspiration

- finite gaussian mixture model.

- start with nonparameteric Bayes model, take a limit to get to a Kmeans like objective.

- kmeans — assign clusters to cluster centers, minimize distance to cluster centers.

- definitions: kmeans objective is the minimization of the euclidean distance.

- Approach: assign all data points (in parallel) to one of K clusters, measure distances. Iterate and minimize distance.

- Our model

- not just learn mean, but learn full probability distribution

- Our objective: Maximum a Posteriori distribution: maximize probability of parameters given data.

- analogies:

- Mixture of gaussians, k- means

- beta process – learn feature maps (?)

- example: each feature is a sum of Gaussian bumbs.

- if each data point belonged to one and only one cluster this returns k-means

- Algorithm

- assign each data point to features

- create new feature if it lowers the objective

- update feature means

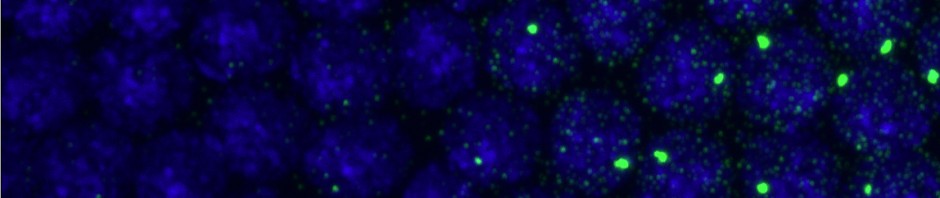

- example problem: pictures of objects on tables. Want to find features (ID items on tables).

- MAD-Bayes faster

- get closer annotation, can get perfect match all objects correct and correct number of features.

- what are we giving up?

- don’t enforce size distribution type

- don’t learn full posterior model. Don’t get systematic treatment of uncertainty.

- parallizing

- challenge, feature choice for each data points depends on current options of features.

- chose from current list, update in next step from all new created features.

Questions

- why do we care about getting the right number of means?

- alternative just chose something bigger than what we expect and not worry about the ‘dust’ of small things that get assigned their own clusters.

- reply: sometimes more aesthetically appealing not to cap. species discovery — get common ones first, have to look a lot to find infrequent ones.